Recurrent Neural Networks

Humans don’t start their thinking from scratch every second. As you read this essay, you understand each word based on your understanding of previous words. Recurrent neural networks (RNNs) try to give neural networks this kind of persistence.

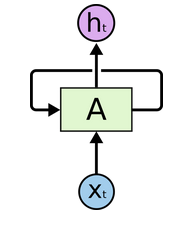

In Fig.1, a chunk of neural network, A, looks at some input x_t and outputs a value h_t. A loop allows information to be passed from one step of the network to the next.

Fig.1 Recurrent Neural Networks have loops.

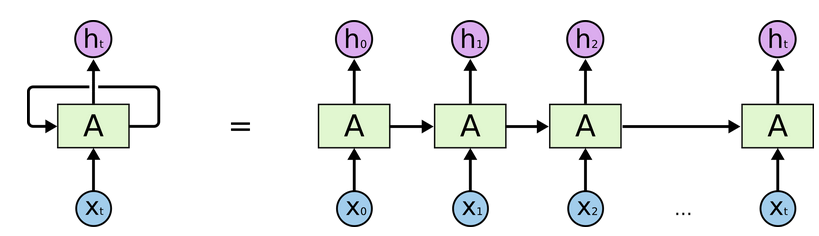

If we unroll the loop, as in Fig.2, a recurrent neural network can be thought of as multiple copies of the same network, each passing a message to its successor.

Fig.2 An unrolled recurrent neural network.
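A minimal sketch of the loop in Fig.1 and its unrolled form in Fig.2, assuming the simplest choice for A: a single tanh layer, h_t = tanh(W_x x_t + W_h h_{t-1} + b). The weight values are made-up toy numbers, and rnn_step and unroll are hypothetical helper names; plain Python lists keep the sketch dependency-free.

```python
import math

def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One step of a vanilla RNN cell A: h_t = tanh(W_x x_t + W_h h_prev + b)."""
    return [
        math.tanh(
            sum(w * x for w, x in zip(W_x[i], x_t))
            + sum(u * h for u, h in zip(W_h[i], h_prev))
            + b[i]
        )
        for i in range(len(b))
    ]

def unroll(xs, h0, W_x, W_h, b):
    """Unrolled RNN: apply the same cell (same weights) to every input,
    passing the hidden state from each copy to its successor."""
    h = h0
    hs = []
    for x_t in xs:
        h = rnn_step(x_t, h, W_x, W_h, b)
        hs.append(h)
    return hs

# Hypothetical toy weights: 2-dim inputs, 3-dim hidden state.
W_x = [[0.5, -0.3], [0.1, 0.2], [-0.4, 0.6]]
W_h = [[0.2, 0.0, 0.1], [0.0, 0.3, -0.2], [0.1, 0.1, 0.1]]
b = [0.0, 0.1, -0.1]
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
hs = unroll(xs, [0.0, 0.0, 0.0], W_x, W_h, b)
print(len(hs), len(hs[-1]))  # 3 3
```

Each pass through the loop in unroll is one copy of A in Fig.2. The copies share W_x, W_h and b, which is why the unrolled picture still describes a single network.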

The Problem of Long-Term Dependencies

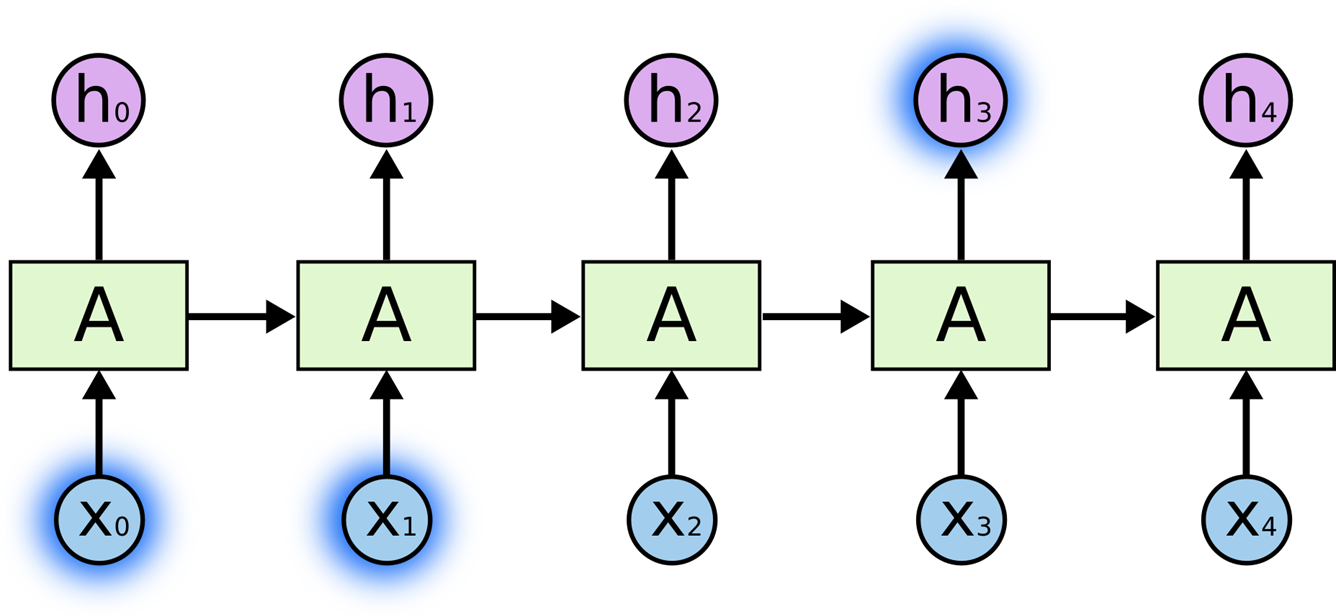

One of the appeals of RNNs is the idea that they might be able to connect previous information to the present task.

For example, if we are trying to predict the last word in “the clouds are in the __,” we don’t need any further context – it’s pretty obvious the next word is going to be sky. In such cases, where the gap between the relevant information and the place that it’s needed is small, RNNs can learn to use the past information.

Fig.3 The connection of previous information to the present task

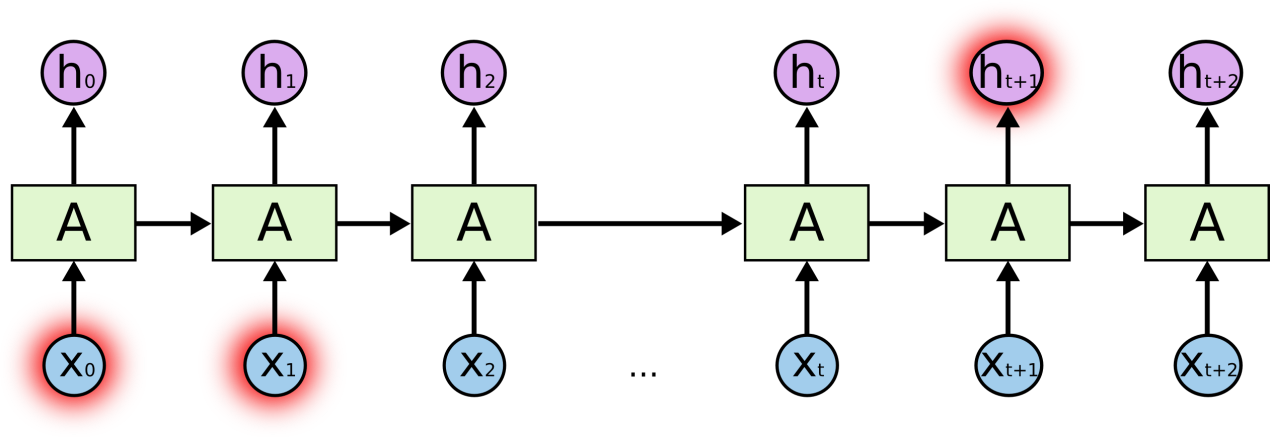

Now consider trying to predict the last word in “I grew up in France… I speak fluent ___.” Recent information suggests that the next word is probably the name of a language, but to narrow down which language, we need the context of France from further back.

Unfortunately, as that gap grows, plain RNNs become unable to learn to connect the information.

Fig.4 A large gap between the relevant information and the step where it is needed
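The text does not say why a long gap causes trouble; the commonly cited explanation is vanishing gradients. The sketch below assumes a one-unit tanh RNN, h_t = tanh(w·h_{t-1} + u·x_t), with hypothetical weights, and computes via the chain rule how strongly h_T still depends on h_0. Each step multiplies the gradient by w·(1 - h_t²), so with |w| < 1 the signal from the first word shrinks roughly geometrically as the gap grows. (When the factors exceed 1, the mirror-image problem, exploding gradients, appears instead.)

```python
import math

def gradient_through_time(w, u, xs, h0=0.0):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w*h_{t-1} + u*x_t).

    By the chain rule, each step contributes a factor
    d h_t / d h_{t-1} = w * (1 - h_t**2); the gradient is their product.
    """
    h = h0
    grad = 1.0
    for x in xs:
        h = math.tanh(w * h + u * x)
        grad *= w * (1.0 - h * h)
    return grad

# Hypothetical weights; only the first input carries a signal,
# followed by (gap - 1) empty steps.
for gap in (1, 5, 10, 20, 50):
    xs = [1.0] + [0.0] * (gap - 1)
    print(gap, gradient_through_time(w=0.9, u=1.0, xs=xs))
```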

Language Modelling

Unlike traditional RNNs, LSTMs (Long Short-Term Memory networks) are explicitly designed to avoid the long-term dependency problem shown in Fig.4.

This tutorial shows how to train a recurrent neural network on the challenging task of language modeling.

Language modeling is important to many interesting problems such as speech recognition, machine translation, or image captioning.

The goal is to fit a probabilistic model that assigns probabilities to sentences by predicting the next word in a text given a history of previous words. To measure the quality of these models, the tutorial uses the Penn Tree Bank (PTB) dataset, and it reproduces the results from Zaremba et al., 2014, whose model achieves very good quality on PTB.
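To make "assigns probabilities to sentences" concrete: by the chain rule, P(w_1, ..., w_n) = P(w_1) · P(w_2 | w_1) · ... · P(w_n | w_1, ..., w_{n-1}), so a model that predicts each next word also scores whole sentences. Quality on PTB is usually reported as perplexity, the exponential of the average negative log-probability per word (lower is better). The per-word probabilities below are invented for illustration, not output from any real model.

```python
import math

def sentence_log_prob(cond_probs):
    """log P(w_1..w_n) = sum_i log P(w_i | w_1..w_{i-1}) (chain rule)."""
    return sum(math.log(p) for p in cond_probs)

def perplexity(cond_probs):
    """exp of the average negative log-probability per word."""
    return math.exp(-sentence_log_prob(cond_probs) / len(cond_probs))

# Hypothetical conditional probabilities a model might assign to each word of
# "the clouds are in the sky".
probs = [0.2, 0.01, 0.3, 0.4, 0.5, 0.6]
print(perplexity(probs))
```

A model that spreads probability uniformly over k words has perplexity exactly k, which is why perplexity is often read as an "effective number of choices" per word.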

Tutorial files

This tutorial references the following files from models/tutorials/rnn/ptb in the TensorFlow models repo:

Download and Prepare the Data

The data required for this tutorial is in the data/ directory of the PTB dataset from Tomas Mikolov's webpage.

The dataset is already preprocessed and contains 10,000 different words in total, including the end-of-sentence marker and a special symbol (\<unk>) for rare words.

In the file reader.py, the tutorial converts each word to a unique integer identifier, in order to make it easy for the neural network to process the data.
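A sketch of the kind of word-to-id mapping the text attributes to reader.py. This is not the code of reader.py: build_vocab and to_ids are hypothetical names, ordering ids by descending frequency (ties broken alphabetically) is an assumption made so the mapping is deterministic, and the end-of-sentence token name in the example is also an assumption.

```python
import collections

def build_vocab(words):
    """Map each distinct word to an integer id; frequent words get small ids.
    Ties are broken alphabetically so the mapping is deterministic."""
    counter = collections.Counter(words)
    ordered = sorted(counter.items(), key=lambda kv: (-kv[1], kv[0]))
    return {word: i for i, (word, _) in enumerate(ordered)}

def to_ids(words, word_to_id):
    """Convert a list of words to their integer ids."""
    return [word_to_id[w] for w in words]

# Hypothetical preprocessed text: sentence ends already marked with <eos>,
# rare words already replaced by <unk>.
text = "the clouds are in the sky <eos> the <unk> is blue <eos>"
words = text.split()
vocab = build_vocab(words)
ids = to_ids(words, vocab)
print(vocab["the"], ids[:4])  # 0 [0, 5, 3, 6]
```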

The Model

Long Short-Term Memory networks (LSTM)

The core of the model consists of an LSTM cell. The LSTM processes one word at a time and computes probabilities of the possible values for the next word in the sentence.

The memory state of the network is initialized with a vector of zeros and gets updated after reading each word.

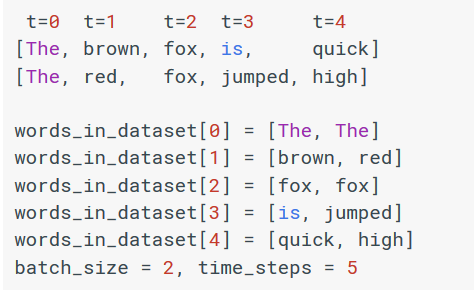
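A dependency-free sketch of one LSTM step, using the standard gate equations from the colah post referenced at the end: forget gate f, input gate i, candidate values g, output gate o, with c_t = f·c_{t-1} + i·g and h_t = o·tanh(c_t). The state starts as zeros and is updated after each word, as described above. The word vectors are hypothetical one-hot stand-ins for learned embeddings, the weights are small random values, and the softmax layer that turns h_t into next-word probabilities is omitted.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W v + b for a matrix W stored as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]

def lstm_step(x, state, params):
    """One step of a standard LSTM cell. state is (h, c); every gate sees
    the concatenation [h_{t-1}, x_t]. Returns (output, new_state)."""
    h, c = state
    hx = h + x  # list concatenation = [h_{t-1}, x_t]
    f = [sigmoid(z) for z in affine(params["W_f"], params["b_f"], hx)]    # forget gate
    i = [sigmoid(z) for z in affine(params["W_i"], params["b_i"], hx)]    # input gate
    g = [math.tanh(z) for z in affine(params["W_c"], params["b_c"], hx)]  # candidate values
    o = [sigmoid(z) for z in affine(params["W_o"], params["b_o"], hx)]    # output gate
    c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
    return h_new, (h_new, c_new)

def init_params(input_size, hidden_size, seed=0):
    """Hypothetical small random initialisation, for illustration only."""
    rng = random.Random(seed)
    n_in = hidden_size + input_size
    params = {}
    for gate in ("f", "i", "c", "o"):
        params["W_" + gate] = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
                               for _ in range(hidden_size)]
        params["b_" + gate] = [0.0] * hidden_size
    return params

hidden_size, input_size = 4, 3
params = init_params(input_size, hidden_size)
# The memory state starts as a vector of zeros ...
state = ([0.0] * hidden_size, [0.0] * hidden_size)
# ... and is updated after reading each (one-hot stand-in) word.
for word_vec in ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]):
    output, state = lstm_step(word_vec, state, params)
print(output)
```

The cell state c carries information forward through mostly element-wise updates controlled by the gates, which is what lets LSTMs bridge longer gaps than the plain tanh RNN.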

For computational reasons, the tutorial processes data in mini-batches of size batch_size. Note that current_batch_of_words does not correspond to a "sentence" of words: every word in a batch corresponds to the same time step t, taken from batch_size separate streams of text. TensorFlow automatically sums the gradients over each batch.
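One reading of the batching description, as a sketch: the long id sequence is cut into batch_size contiguous streams, and each current_batch_of_words holds the word at the same time step t from every stream. batchify and columns are hypothetical helper names; the TensorFlow reader has its own implementation, which may differ in details.

```python
def batchify(ids, batch_size):
    """Split one long id sequence into batch_size contiguous streams (rows).
    Leftover ids that do not fill a complete row are dropped."""
    batch_len = len(ids) // batch_size
    return [ids[r * batch_len:(r + 1) * batch_len] for r in range(batch_size)]

def columns(streams):
    """Yield one current_batch_of_words per time step t: the t-th word of
    every stream. Rows are independent streams, not sentences."""
    for t in range(len(streams[0])):
        yield [row[t] for row in streams]

ids = list(range(12))  # hypothetical word ids 0..11
streams = batchify(ids, batch_size=3)
print(streams)                 # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11]]
print(next(columns(streams)))  # [0, 4, 8]
```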

The basic pseudocode is as follows:
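The pseudocode itself is missing here, so the following is a runnable stand-in under stated assumptions. current_batch_of_words and batch_size come from the text; words_in_dataset, target_words, softmax_w, softmax_b and the toy sizes are hypothetical. To keep it short, a plain tanh cell stands in for the LSTM (an LSTM cell would slot in at the same place). The state starts at zero and is updated after each batch, and each output is projected to vocabulary logits whose softmax gives next-word probabilities, accumulated into a cross-entropy loss.

```python
import math
import random

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cell(word_ids, state, emb, W_h):
    """Stand-in recurrent cell (a plain tanh RNN, NOT an LSTM) applied to a
    batch: one hidden vector per stream. Embedding size equals hidden size."""
    new_state = []
    for wid, h in zip(word_ids, state):
        x = emb[wid]
        new_state.append([math.tanh(x[j] + sum(W_h[j][k] * h[k] for k in range(len(h))))
                          for j in range(len(h))])
    return new_state, new_state  # (output, state)

rng = random.Random(0)
vocab_size, hidden, batch_size = 5, 3, 2
emb = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(vocab_size)]
W_h = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(hidden)]
softmax_w = [[rng.uniform(-0.5, 0.5) for _ in range(vocab_size)] for _ in range(hidden)]
softmax_b = [0.0] * vocab_size

# Hypothetical data: each element is one time step for 2 streams;
# targets are the next word of each stream.
words_in_dataset = [[0, 3], [1, 4], [2, 0]]
target_words = [[1, 4], [2, 0], [3, 1]]

state = [[0.0] * hidden for _ in range(batch_size)]  # initial memory state: zeros
loss = 0.0
for current_batch_of_words, targets in zip(words_in_dataset, target_words):
    # The state is updated after processing each batch of words.
    output, state = cell(current_batch_of_words, state, emb, W_h)
    for h, target in zip(output, targets):
        logits = [sum(h[k] * softmax_w[k][v] for k in range(hidden)) + softmax_b[v]
                  for v in range(vocab_size)]
        probs = softmax(logits)
        loss += -math.log(probs[target])  # cross-entropy for the true next word
print(loss / (len(words_in_dataset) * batch_size))  # average loss per word
```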

Truncated Backpropagation

By design, the output of a recurrent neural network (RNN) depends on arbitrarily distant inputs. However, this makes backpropagation difficult, because the gradient would have to be propagated back through every earlier step. To make the learning process tractable, it is common practice to create an "unrolled" version of the network (Fig.2), which contains a fixed number (num_steps) of LSTM inputs and outputs.


The model is then trained on this finite approximation of the RNN.

This can be implemented by feeding inputs of length num_steps at a time and performing a backward pass after each such input block.

The following is a simplified block of code for creating a graph which performs truncated backpropagation:
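The graph-building code is missing here. As a framework-free sketch of the same idea, assuming a one-unit tanh RNN and a squared-error loss (both stand-ins, not the tutorial's model), train_window runs num_steps inputs forward and then backpropagates through only those steps. The incoming state h0 is treated as a constant, which is exactly where the gradient is truncated. train_window is a hypothetical name.

```python
import math

def train_window(xs, ys, h0, w, u):
    """Forward num_steps inputs through h_t = tanh(w*h_{t-1} + u*x_t), then
    backpropagate only inside this window. h0 (carried in from the previous
    window) is a constant, so no gradient flows past the window boundary."""
    # Forward pass: record every hidden state.
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    loss = 0.5 * sum((h - y) ** 2 for h, y in zip(hs[1:], ys))

    # Backward pass through the num_steps unrolled copies.
    dw = du = 0.0
    dh_next = 0.0  # gradient reaching h_t from step t+1
    for t in reversed(range(len(xs))):
        h_t, h_prev = hs[t + 1], hs[t]
        dh = (h_t - ys[t]) + dh_next  # this step's loss plus the future
        dz = dh * (1.0 - h_t * h_t)   # back through tanh
        dw += dz * h_prev
        du += dz * xs[t]
        dh_next = dz * w              # pass back to h_{t-1}
    return loss, dw, du, hs[-1]

# Hypothetical toy data: one block with num_steps = 3.
loss, dw, du, h_last = train_window([0.5, -1.0, 0.3], [0.1, 0.2, -0.3], h0=0.2, w=0.7, u=-0.4)
w, u = 0.7 - 0.1 * dw, -0.4 - 0.1 * du  # one gradient-descent step, lr = 0.1
```

h_last is what would be passed on as the next block's h0, so the forward computation continues across blocks even though the backward pass stops at each boundary.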

And this is how to implement an iteration over the whole dataset:
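The iteration code is missing here as well. A minimal sketch, again assuming a hypothetical one-unit tanh RNN rather than the tutorial's LSTM: the dataset is walked in blocks of num_steps, and the final state of each block is fed in as the initial state of the next. run_window and run_epoch are hypothetical names, and the sine-wave data is invented.

```python
import math

def run_window(xs, ys, h, w, u):
    """Run one num_steps block forward; return its loss and final state."""
    loss = 0.0
    for x, y in zip(xs, ys):
        h = math.tanh(w * h + u * x)
        loss += 0.5 * (h - y) ** 2
    return loss, h

def run_epoch(xs, ys, num_steps, w, u):
    """Iterate over the whole dataset in num_steps blocks, feeding the final
    state of each block in as the initial state of the next."""
    state = 0.0  # initial state: zero
    total_loss = 0.0
    for start in range(0, len(xs), num_steps):
        loss, state = run_window(xs[start:start + num_steps],
                                 ys[start:start + num_steps], state, w, u)
        total_loss += loss
    return total_loss

xs = [math.sin(0.3 * t) for t in range(40)]
ys = xs[1:] + [0.0]  # hypothetical target: predict the next value
print(run_epoch(xs, ys, num_steps=5, w=0.5, u=0.8))
```

Because the state is carried over, the forward computation matches running the whole sequence as one block; only the gradients are truncated. Resetting the state to zero at every block would cut the information flow as well.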

reference: https://www.tensorflow.org/tutorials/recurrent

reference: https://colah.github.io/posts/2015-08-Understanding-LSTMs/